The History of the Microprocessor and the Personal Computer

The personal computing business as we know it owes itself to an environment of enthusiasts, entrepreneurs and happenstance. Before PCs, the mainframe and minicomputer business model was formed around a single company providing an entire ecosystem: building the hardware, installation, maintenance, writing the software, and training operators.

This approach served its purpose in a world that seemingly required few computers. It made the systems hugely expensive yet highly lucrative for the companies involved, since the initial cost and service contract ensured a steady stream of revenue. The "big iron" companies weren't the initial driving force in personal computing because of cost, the lack of off-the-shelf software, the perceived lack of need for individuals to own computers, and the generous profit margins afforded by mainframe and minicomputer contracts.

It was in this atmosphere that personal computing began, with hobbyists looking for creative outlets not offered by their day jobs involving the monolithic systems. The invention of the microprocessor, DRAM, and EPROM integrated circuits would spark the widespread use of variants of the BASIC high-level language, which would lead to the introduction of the GUI and bring computing to the mainstream. The resulting standardization and commoditization of hardware would finally make computing relatively affordable for the individual.

Over the next few weeks we'll be taking an extensive look at the history of the microprocessor and the personal computer, from the invention of the transistor to the modern-day chips powering a multitude of connected devices.

1947 - 1974: Foundations

Leading up to Intel's 4004, the first commercial microprocessor

Early personal computing required enthusiasts to have skills in both electrical component assembly (predominantly the ability to solder) and machine coding, since software at this time was a bespoke affair where it was available at all.

The established commercial market leaders didn't take personal computing seriously because of limited input-output functionality and software, a dearth of standardization, high user skill requirements, and few envisaged applications. Intel's own engineers had lobbied for the company to pursue a personal computing strategy almost as soon as the 8080 started being implemented in a much wider range of products than originally foreseen. Steve Wozniak would plead with his employer, Hewlett-Packard, to do the same.

John Bardeen, William Shockley and Walter Brattain at Bell Labs, 1948.

While hobbyists initiated the personal computing phenomenon, the current situation is largely an extension of the lineage that began with work by Michael Faraday, Julius Lilienfeld, Boris Davydov, Russell Ohl, and Karl Lark-Horovitz, through to William Shockley, Walter Brattain, John Bardeen, Robert Gibney, and Gerald Pearson, who co-developed the first transistor (a portmanteau of "transfer resistance") at Bell Telephone Labs in December 1947.

Bell Labs would go on to be a prime mover in transistor advances (notably the metal oxide semiconductor field-effect transistor, or MOSFET, in 1959) but granted extensive licenses to other companies in 1952 to avoid anti-trust sanctions from the U.S. Department of Justice. Thus Bell and its manufacturing parent, Western Electric, were joined by forty companies, including General Electric, RCA, and Texas Instruments, in the rapidly expanding semiconductor business. Shockley would leave Bell Labs and start Shockley Semi-Conductor in 1956.

The first transistor ever assembled, invented by Bell Labs in 1947

Shockley was an excellent engineer, but his caustic personality, allied with his poor management of employees, doomed the undertaking in short order. Within a year of assembling his research team he had alienated enough members to cause the mass exodus of "The Traitorous Eight". The group included Robert Noyce and Gordon Moore, two of Intel's future founders, Jean Hoerni, inventor of the planar manufacturing process for transistors, and Jay Last. Members of The Eight would provide the nucleus of the new Fairchild Semiconductor division of Fairchild Camera and Instrument, a company that became the model for the Silicon Valley start-up.

Fairchild company management would increasingly marginalize the new division while focusing on profit from high-profile transistor contracts. These included the IBM-built flight systems of the North American XB-70 Valkyrie strategic bomber, the Autonetics flight computer of the Minuteman ICBM system, the CDC 6600 supercomputer, and NASA's Apollo Guidance Computer.

However, profit declined as Texas Instruments, National Semiconductor, and Motorola gained their share of contracts. By late 1967, Fairchild Semiconductor had become a shadow of its former self as budget cuts and key personnel departures began to take hold. Prodigious R&D acumen wasn't translating into commercial products, and combative factions within management proved counter-productive to the company.

The Traitorous Eight, who quit Shockley to start Fairchild Semiconductor. From left: Gordon Moore, Sheldon Roberts, Eugene Kleiner, Robert Noyce, Victor Grinich, Julius Blank, Jean Hoerni, Jay Last. (Photo © Wayne Miller/Magnum)

Chief among those to leave would be Charles Sporck, who revitalized National Semiconductor, as well as Gordon Moore and Robert Noyce. While over fifty new companies would trace their origins to the breakup of Fairchild's workforce, none achieved as much as the new Intel Corporation in such a short span. A single phone call from Noyce to the venture capitalist Arthur Rock resulted in the $2.3 million start-up funding being raised in an afternoon.

The ease with which Intel was brought into existence was in large part due to the stature of Robert Noyce and Gordon Moore. Noyce is largely credited with co-inventing the integrated circuit along with Texas Instruments' Jack Kilby, although he almost certainly borrowed very heavily from earlier work. One source was James Nall and Jay Lathrop's team at the U.S. Army's Diamond Ordnance Fuze Laboratory (DOFL), which produced the first transistor constructed using photolithography and evaporated aluminum interconnects in 1957-59. Another was Jay Last's integrated circuit team at Fairchild (including the newly acquired James Nall), of which Robert Noyce was project chief.

First planar IC (Photo © Fairchild Semiconductor).

Moore and Noyce would take with them from Fairchild the new self-aligned silicon gate MOS (metal oxide semiconductor) technology suitable for manufacturing integrated circuits. It had recently been pioneered by Federico Faggin, on loan from a joint venture between the Italian SGS and Fairchild companies, building upon the work of John Sarace's Bell Labs team. Faggin would take his expertise to Intel upon becoming a permanent U.S. resident.

Fairchild would rightly feel aggrieved over the revolt, as it would over many employee breakthroughs that ended up in the hands of others -- notably National Semiconductor. This brain drain was not quite as one-sided as it would appear, since Fairchild's first microprocessor, the F8, in all likelihood traced its origins to Olympia Werke's unrealized C3PF processor project.

In an era when patents had yet to assume the strategic importance they have today, time to market was of paramount importance, and Fairchild was often too slow in realizing the significance of its developments. The R&D division became less product-orientated, devoting sizable resources to research projects.

Texas Instruments, the second largest integrated circuit producer, quickly eroded Fairchild's position as market leader. Fairchild still held a prominent standing in the industry, but internally the management structure was chaotic. Production quality assurance (QA) was poor by industry standards, with yields of 20% being common.

While engineering employee turnover increased as "Fairchildren" left for more stable environments, Fairchild's Jerry Sanders moved from aerospace and defence marketing to overall director of marketing. He unilaterally decided to launch a new product every week: the "Fifty-Two" plan. The accelerated time to market would doom many of these products to yields of around 1%. An estimated 90% of the products shipped later than scheduled, carried defects in design specification, or both. Fairchild's star was about to be eclipsed.

If Gordon Moore and Robert Noyce's stature gave Intel a flying start as a company, the third man to join the team would become both the public face of the company and its driving force. Andrew Grove, born András Gróf in Hungary in 1936, became Intel's Director of Operations despite having little background in manufacturing. The choice seemed perplexing on the surface -- even allowing for his friendship with Gordon Moore -- as Grove was an R&D scientist with a background in chemistry at Fairchild and a lecturer at Berkeley with no experience in company management.

The fourth man in the company would define its early marketing strategy. Bob Graham was technically the third employee of Intel, but was required to give three months' notice to his employer. The delay in moving to Intel would allow Andy Grove to take on a much larger management role than originally envisaged.

Intel's first hundred employees pose outside the company's Mountain View, California, headquarters in 1969.

(Source: Intel / Associated Press)

An excellent salesman, Graham was seen as one of two outstanding candidates for the Intel management team. The other, W. Jerry Sanders III, was a personal friend of Robert Noyce. Sanders was one of the few Fairchild management executives to retain their jobs in the wake of C. Lester Hogan's appointment as CEO (from an irate Motorola).

Sanders' initial confidence in remaining Fairchild's top marketing man evaporated quickly as Hogan grew unimpressed with Sanders' flamboyance and his team's unwillingness to accept small contracts ($1 million or less). Hogan effectively demoted Sanders twice in a matter of weeks with the successive promotions of Joseph Van Poppelen and Douglas J. O'Conner above him. The demotions achieved what Hogan had intended -- Jerry Sanders resigned and most of Fairchild's key positions were occupied by Hogan's former Motorola executives.

Within weeks Jerry Sanders had been approached by four other ex-Fairchild employees from the analog division who were interested in starting up their own business. As originally conceived by the four, the company would produce analog circuits, since the breakup (or meltdown) of Fairchild was fostering a vast number of start-ups looking to cash in on the digital circuit craze. Sanders joined on the understanding that the new company would also pursue digital circuits. The team would have eight members: Sanders; Ed Turney, one of Fairchild's best salesmen; John Carey; chip designer Sven Simonssen; and the original four analog division members, Jack Gifford, Frank Botte, Jim Giles, and Larry Stenger.

Advanced Micro Devices, as the company would be known, got off to a rocky start. Intel had secured funding in less than a day because the company was formed by engineers, but investors were much more reticent when faced with a semiconductor business proposal headed by marketing executives. The first stop in securing AMD's initial $1.75 million in capital was Arthur Rock, who had supplied funding for both Fairchild Semiconductor and Intel. Rock declined to invest, as would a succession of possible money sources.

Eventually, Tom Skornia, AMD's newly minted legal representative, arrived at Robert Noyce's door. Intel's co-founder would thus become one of the founding investors in AMD. Noyce's name on the investor list added a degree of legitimacy to AMD's business vision that it had so far lacked in the eyes of possible investors. Further funding followed, with the revised $1.55 million target reached just before the close of business on June 20, 1969.

Intel's formation was somewhat more straightforward, allowing the company to get straight to business once funding and premises were secured. Its first commercial product was also one of five notable industry "firsts", accomplished in less than three years, that were to revolutionize both the semiconductor industry and the face of computing.

Honeywell, one of the computer vendors that lived within IBM's vast shadow, approached numerous chip companies with a request for a 64-bit static RAM chip.

Intel had already formed two groups for chip manufacture: a MOS transistor team led by Les Vadász, and a bipolar transistor team led by Dick Bohn. The bipolar team was first to achieve the goal, and the world's first 64-bit SRAM chip was handed over to Honeywell in April 1969 by its chief designer, H.T. Chua. Producing a successful first-time design for a million-dollar contract would only add to Intel's early industry reputation.

Intel's first product, a 64-bit SRAM based on the newly developed Schottky bipolar technology. (CPU-Zone)

In keeping with the naming conventions of the day, the SRAM chip was marketed under its part number, 3101. Intel, along with virtually all chipmakers of the time, did not market its products to consumers, but to engineers within companies. Part numbers, especially if they had significance such as the transistor count, were deemed to appeal more to prospective clients. Also, giving the product an actual name could suggest that the name masked engineering deficiencies or a lack of substance. Intel tended to move away from numerical part naming only when it became painfully apparent that numbers couldn't be trademarked.

While the bipolar team provided Intel's first breakout product, the MOS team identified the main culprit in its own chips' failings. The silicon-gate MOS process required numerous heating and cooling cycles during chip manufacturing. These cycles caused differences in the expansion and contraction rates of the silicon and the metal oxide, which led to cracks that broke circuits in the chip. Gordon Moore's solution was to "dope" the metal oxide with impurities to lower its melting point, allowing the oxide to flow with the cyclic heating. The resulting chip, which arrived from the MOS team in July 1969 as an extension of the work done at Fairchild on the 3708 chip, became the first commercial MOS memory chip: the 256-bit 1101.

Honeywell quickly signed up for a successor to the 3101, dubbed the 1102. Early in its development, however, a parallel project, the 1103, showed considerable potential; it was headed by Vadász with Bob Abbott, John Reed and Joel Karp (who also oversaw the 1102's development). Both were based on a three-transistor memory cell proposed by Honeywell's William Regitz that promised much higher cell density and lower manufacturing cost. The downside was that the memory wouldn't retain information in an unpowered state, and the circuits would need to have voltage applied (refreshed) every two milliseconds.

The first MOS memory chip, Intel 1101, and the first DRAM memory chip, Intel 1103. (CPU-Zone)

At the time, computer random access memory was the province of magnetic-core memory. This technology was rendered all but obsolete by the arrival of Intel's 1103 DRAM (dynamic random access memory) chip in October 1970. By the time manufacturing bugs were worked out early the next year, Intel had a sizeable lead in a dominant and fast-growing market. It benefited from that lead until the start of the 1980s, when massive infusions of capital into manufacturing capacity allowed Japanese memory makers to cause a sharp decline in memory prices.

Intel launched a nationwide marketing campaign inviting magnetic-core memory users to phone Intel collect and have their expenditure on system memory slashed by switching to DRAM. Inevitably, customers would ask about second-source supply of the chips in an era when yields and supply couldn't be taken for granted.

Andy Grove was vehemently opposed to second sourcing, but such was Intel's status as a young company that it had to accede to industry demand. Intel chose a Canadian company, Microsystems International Limited (MIL), as its first second source of chip supply rather than a larger, more experienced company that could dominate Intel with its own product. Intel would gain around $1 million from the license agreement, and would gain further when MIL attempted to boost profits by increasing wafer sizes (from 2 inches to 3) and shrinking the chip. MIL's customers turned to Intel as the Canadian firm's chips came off the assembly line defective.

Intel's initial experience wasn't indicative of the industry as a whole, nor of its own later issues with second sourcing. AMD's growth was directly aided by becoming a second source for Fairchild's 9300 series TTL (Transistor-Transistor Logic) chips, and by securing, designing, and delivering a custom chip for Westinghouse's military division that Texas Instruments (the initial contractor) had difficulty producing on time.

Intel's early fabrication failings using the silicon gate process also led to its third, and most immediately profitable, chip, as well as an industry lead in yields. Intel assigned another Fairchild alumnus, the young physicist Dov Frohman, to investigate the process issues. Frohman surmised that the gates of some of the transistors had become disconnected, floating above and encased within the oxide separating them from their electrodes.

Frohman also demonstrated to Gordon Moore that these floating gates could hold an electrical charge because of the surrounding insulator (in some cases for many decades), and could thus be programmed. In addition, the floating gate's electrical charge could be dissipated with ionizing ultraviolet radiation, which would erase the programming.

Conventional memory required the programming circuits to be laid down during the chip's manufacture, with fuses built into the design to allow for variations in programming. This method is costly on a small scale, needs many different chips to suit individual purposes, and requires chip alteration when redesigning or revising the circuits.

The EPROM (Erasable Programmable Read-Only Memory) revolutionized the technology, making memory programming much more accessible and many times faster, since the customer did not have to wait for application-specific chips to be manufactured.

The downside of this technology was that, for UV light to erase the chip, a relatively expensive quartz window had to be incorporated in the chip packaging directly above the silicon die. The high cost would later be eased by the introduction of one-time programmable (OTP) EPROMs, which did away with the quartz window (and the erase function), and of electrically erasable programmable ROMs (EEPROMs).

As with the 3101, initial yields were very poor -- less than 1% for the most part. The 1702 EPROM required a precise voltage for memory writes, and variances in manufacturing translated into an inconsistent write voltage requirement: too little voltage and the programming would be incomplete, too much and it risked destroying the chip. Joe Friedrich, recently lured away from Philco and another engineer who had honed his craft at Fairchild, hit upon passing a high negative voltage across the chips before writing data. Friedrich named the process "walking out", and it would increase yields from one chip every two wafers to sixty per wafer.

Intel 1702, the first EPROM chip. (computermuseum.li)

Because the walk-out did not physically change the chip, other manufacturers selling Intel-designed ICs would not immediately discover the reason for Intel's leap in yields. These increased yields directly improved Intel's fortunes, as revenue climbed 600% between 1971 and 1973. The yields, stellar in comparison to those of the second-source companies, gave Intel a marked advantage over the same parts being sold by AMD, National Semiconductor, Sigtronics, and MIL.

ROM and DRAM were two essential components of a system that would become a milestone in the development of personal computing. In 1969, the Nippon Calculating Machine Corporation (NCM) approached Intel wanting a twelve-chip system for a new desktop calculator. Intel at this stage was in the process of developing its SRAM, DRAM, and EPROM chips and was eager to obtain its initial business contracts.

NCM's original proposal outlined a system requiring eight chips specific to the calculator, but Intel's Ted Hoff hit upon the idea of borrowing from the larger minicomputers of the day. Rather than having individual chips handle individual tasks, the idea was to make a general-purpose chip that tackled the combined workload, turning the individual tasks into subroutines as the larger computers did. Hoff's idea would reduce the number of chips needed to just four: a shift register for input-output, a ROM chip, a RAM chip, and the new processor chip.

NCM and Intel signed the contract for the new system on February 6, 1970, and Intel received an advance of $60,000 against a minimum order of 60,000 kits (with a minimum of eight chips per kit) over three years. The job of bringing the processor and its three support chips to fruition would be entrusted to another disaffected Fairchild employee.

Federico Faggin had grown disillusioned both with Fairchild's inability to translate its R&D breakthroughs into tangible products before rivals exploited them, and with his own continued position as a manufacturing process engineer when his main interest lay in chip architecture. After he contacted Les Vadász at Intel, he was invited to head a design project with no more foreknowledge than its description as "challenging". Faggin was to find out what the four-bit MCS-4 project entailed on April 3, 1970, his first day of work, when he was briefed by engineer Stan Mazor. The next day Faggin was thrown in at the deep end, meeting with NCM's representative, Masatoshi Shima, who expected to see the logic design of the processor rather than hear an outline from a man who had been on the project for less than a day.

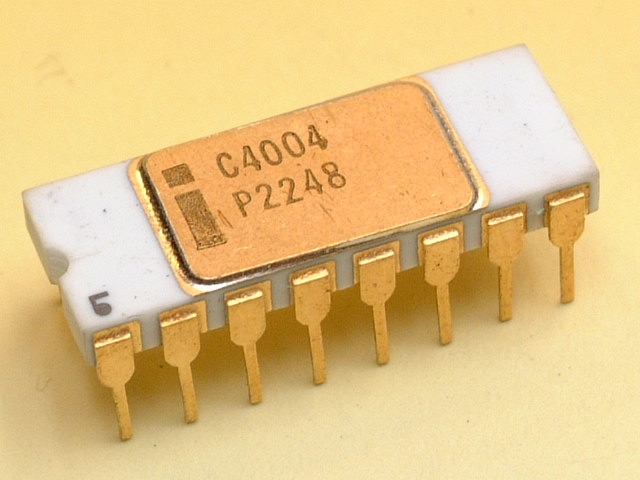

Intel 4004, the first commercial microprocessor, had 2,300 transistors and ran at a clock speed of 740 kHz. (CPU-Zone)

Faggin's team, which now included Shima for the duration of the design stage, quickly set about developing the four chips. The design for the simplest of them, the 4001, was completed in a week, with the layout taking a single draftsman a month to complete. By May, the 4002 and 4003 had been designed and work had started on the 4004 microprocessor. The first pre-production run came off the assembly line in December, but because the vital buried contact layer was omitted from fabrication, the chips were non-working. A second revision corrected the mistake, and three weeks later all four working chips were ready for the test phase.

The 4004 might have been a footnote in semiconductor history if it had remained a custom part for NCM. However, falling prices for consumer electronics, especially in the competitive desktop calculator market, caused NCM to approach Intel and ask for a reduction in unit pricing from the agreed contract. Armed with the knowledge that the 4004 could have many further applications, Bob Noyce proposed a price cut and a refund of NCM's $60,000 advance payment in exchange for Intel being able to market the 4004 to customers in markets other than calculators. Thus the 4004 became the first commercial microprocessor.

Two other designs of the era were proprietary to whole systems. Garrett AiResearch's MP944 was a component of the Grumman F-14 Tomcat's Central Air Data Computer, which was responsible for optimizing the fighter's variable geometry wings and glove vanes, while Texas Instruments' TMS 0100 and 1000 were initially only available as components of handheld calculators such as the Bowmar 901B.

While the 4004 and MP944 required a number of support chips (ROM, RAM, and I/O), the Texas Instruments chip combined these functions with the CPU on a single chip, making it the world's first microcontroller, or "computer-on-a-chip" as it was marketed at the time.

Inside the Intel 4004

Texas Instruments and Intel would enter into a cross-license involving logic, process, microprocessor, and microcontroller IP in 1971 (and once more in 1976) that would herald an era of cross-licensing, joint ventures, and the patent as a commercial weapon.

Completion of the NCM (Busicom) MCS-4 system freed up resources to continue a more ambitious project whose origins pre-dated the 4004 design. In late 1969, flush with cash from its IPO, Computer Terminal Corporation (CTC, later Datapoint) contacted both Intel and Texas Instruments with a requirement for an 8-bit terminal controller.

Texas Instruments dropped out fairly early on. Intel's 1201 project, started in March 1970, had stalled by July as project head Hal Feeney was co-opted onto a static RAM chip project. As deadlines approached, CTC would eventually opt for a simpler discrete collection of TTL chips. The 1201 project would languish until Seiko showed interest in using it in a desktop calculator and Faggin had the 4004 up and running in January 1971.

In today's environment it seems almost incomprehensible that microprocessor development should play second fiddle to memory, but in the late 1960s and early 1970s computing was the province of mainframes and minicomputers. Fewer than 20,000 mainframes were sold worldwide each year, and IBM dominated this relatively small market (followed, to a lesser extent, by UNIVAC, GE, NCR, CDC, RCA, Burroughs, and Honeywell, the "Seven Dwarfs" to IBM's "Snow White"). Meanwhile, Digital Equipment Corporation (DEC) effectively owned the minicomputer market. Intel management and other microprocessor companies couldn't see their chips usurping the mainframe and minicomputer, whereas new memory chips could service these sectors in vast quantities.

The 1201 duly arrived in April 1972, renamed the 8008 to indicate that it was a follow-on from the 4004. The chip enjoyed reasonable success but was handicapped by its 18-pin package, which limited its input-output (I/O) and external bus options. Being relatively slow and still reliant on programming in early assembly language and machine code, the 8008 was a far cry from the usability of modern CPUs. Even so, the recent launch and commercialization of IBM's 23FD eight-inch floppy disk would add impetus to the microprocessor market over the next few years.

Intellec 8 development system (computinghistory.org.uk)

Intel's push for wider adoption resulted in the 4004 and 8008 being incorporated in the company's first development systems, the Intellec 4 and Intellec 8. The latter would figure prominently in the development of the first microprocessor-oriented operating system, a major "what if" moment for the hardware and software industries as well as in Intel's history. Feedback from users and potential customers, together with the growing complexity of calculator-based processors, resulted in the 8008 evolving into the 8080, which finally kick-started personal computer development.

This article is the first installment in a series of five. If you enjoyed this, read on as we delve into the birth of the first personal computer companies. Or if you feel like reading more about the history of computing, check out our amazing series on the history of computer graphics.

Source: https://www.techspot.com/article/874-history-of-the-personal-computer/

Posted by: patersonfrok1965.blogspot.com
